The Math Behind RSA Keypair Encryption

How Keypair Encryption Works and the Role of Prime Numbers: In the realm of digital security, keypair encryption is a …

Vulnerability-Assessment-patching-Semi-Automation

Download or git clone the script from the URL below: https://github.com/vinothkumarselvaraj/vulnerability-Assessment-patching-Semi-Automation.git vulnerability_Assessment_patch_pkg_render_v2.py: This Python script will give you the package …

Python Script to List OpenStack Orphaned Resources

Whenever we hard-delete a project without first releasing the resources allocated to it, the resources mapped to the …

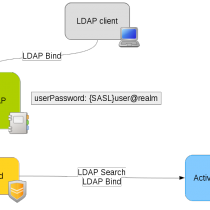

Pass-through OpenLDAP Authentication (Using SASL) to Active Directory on CentOS

The idea is to ask OpenLDAP to delegate the authentication using the SASL protocol. Then the saslauth daemon performs the …

“nova.compute.resource_tracker” Out of Sync!

Have you ever noticed the metrics shown by “free -m” on the compute node, and the output of “Nova …

OpenStack Bootcamp by Vinoth Kumar Selvaraj (Author)

I am pleased to announce that my book, “OpenStack Bootcamp”, is now available on Amazon.com.

My article published in the July 2017 issue of OSFY Magazine

Pleased to share with you all that my article on “How to run OpenStack on AWS” has been published in …

My article published in the March 2017 issue of OSFY Magazine

Pleased to share with you all that my article on “Integrating OpenDaylight VTN Manager with OpenStack” has been published in …

Guide for running OpenStack on AWS – A perfect way to do R&D on OpenStack

Cloud on a cloud! Yes, you read that correctly. This post will guide you through installing OpenStack on …

OpenDaylight VTN Manager Integration with OpenStack

Virtual Tenant Network (VTN) Technical Introduction: OpenDaylight Virtual Tenant Network (VTN) is an application that provides a multi-tenant virtual …